High-Integrity Data Review of 963115591, 3512758724, 658208892, 634188994, 685789080, 1832901

A review of identifiers such as 963115591, 3512758724, 658208892, 634188994, 685789080, and 1832901 shows why high-integrity data practices matter: records like these are only useful if every value in them is accurate. Systematic methods can make validation considerably more effective, but maintaining integrity remains difficult. This article looks at why such reviews matter, the methods that support them, examples from practice, and the trends likely to shape data quality management.

Importance of High-Integrity Data Review

Many organizations prioritize collecting data, but collection alone does not make data trustworthy. A high-integrity data review is what establishes that it is.

A review of this kind validates records and provides quality assurance, protecting the accuracy and reliability of the information that later decisions depend on.

Methodologies for Ensuring Data Quality

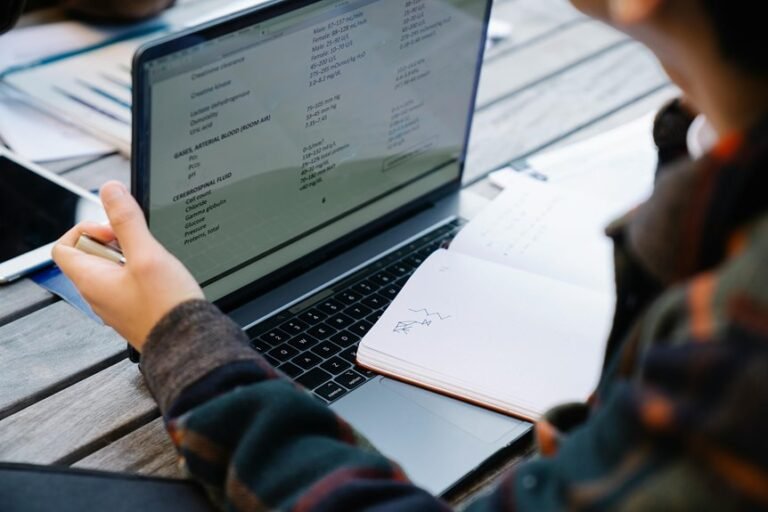

Ensuring data quality requires systematic methods that address each aspect of data integrity.

Key strategies include validation techniques that check records for accuracy and consistency, and error-detection mechanisms that flag anomalies.

These methods make data more reliable, which lets stakeholders make informed decisions and encourages transparency and accountability in how data is managed.
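The validation and error-detection strategies above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed method: the source does not define a format for identifiers such as 963115591, so the rules below (each identifier is a non-empty string of ASCII digits, repeated identifiers are errors, and an identifier whose length differs from the most common length is flagged for review) are hypothetical, as are the function names `validate` and `flag_length_anomalies`.

```python
from collections import Counter


# Hypothetical rule: the source gives no identifier format, so we assume each
# identifier must be a non-empty string of ASCII digits.
def validate(ids: list[str]) -> dict[str, list[str]]:
    """Return identifiers that fail the format check or occur more than once."""
    bad_format = [i for i in ids if not (i.isascii() and i.isdigit())]
    counts = Counter(ids)
    duplicates = sorted(i for i, n in counts.items() if n > 1)
    return {"bad_format": bad_format, "duplicates": duplicates}


def flag_length_anomalies(ids: list[str]) -> list[str]:
    """Flag identifiers whose length differs from the most common length.

    A deliberately simple, hypothetical error-detection heuristic; flagged
    values are candidates for manual review, not confirmed errors.
    """
    if not ids:
        return []
    typical_length, _ = Counter(len(i) for i in ids).most_common(1)[0]
    return [i for i in ids if len(i) != typical_length]


records = ["963115591", "3512758724", "658208892", "634188994", "685789080", "1832901"]
print(validate(records))               # {'bad_format': [], 'duplicates': []}
print(flag_length_anomalies(records))  # ['3512758724', '1832901']
```

Flagging `3512758724` and `1832901` does not mean they are wrong; under this heuristic they simply differ from the majority length (nine digits) and would be routed to a reviewer.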

Case Studies Highlighting Data Integrity

While various industries grapple with the challenges of maintaining data integrity, several case studies illustrate effective strategies for overcoming these obstacles.

In one instance, a healthcare provider implemented rigorous data validation protocols, significantly enhancing error detection rates.

Similarly, a financial institution used advanced analytics to ensure accurate reporting. Both cases suggest that proactive measures can protect data integrity and make the critical decisions that rely on that data more trustworthy.

Future Trends in Data Quality Management

How will emerging technologies reshape data quality management in the coming years?

Predictive analytics may improve accuracy by anticipating likely errors before they enter a dataset, while automated validation can monitor data continuously and correct discrepancies as they appear.

These advances promise to streamline workflows, reduce manual intervention, and help organizations keep their data at a high standard as their reliance on it grows.
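Automated validation of the kind described above might look like the following minimal sketch. It assumes, hypothetically, that identifiers arrive in batches, that surrounding whitespace is a discrepancy safe to correct automatically, and that any other malformed value must go to a person; `auto_validate`, `ValidationReport`, and the sample value `63418899X` are illustrative inventions, not part of the source. Predictive error anticipation is not covered here.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    accepted: list[str] = field(default_factory=list)               # clean values, after any fixes
    corrected: list[tuple[str, str]] = field(default_factory=list)  # (original, fixed) pairs
    rejected: list[str] = field(default_factory=list)               # need human review


def auto_validate(batch: list[str]) -> ValidationReport:
    """Validate one batch of identifiers without manual intervention.

    Hypothetical rules: strip surrounding whitespace automatically; reject
    anything that is still not a non-empty string of ASCII digits.
    """
    report = ValidationReport()
    for raw in batch:
        fixed = raw.strip()
        if not (fixed.isascii() and fixed.isdigit()):
            report.rejected.append(raw)
            continue
        if fixed != raw:
            report.corrected.append((raw, fixed))
        report.accepted.append(fixed)
    return report


report = auto_validate([" 963115591", "658208892", "63418899X"])
print(report.accepted)   # ['963115591', '658208892']
print(report.corrected)  # [(' 963115591', '963115591')]
print(report.rejected)   # ['63418899X']
```

For continuous monitoring, the same function would be called on each batch as it arrives (for example, from a scheduler or message consumer), with the `rejected` list feeding a review queue.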

Conclusion

Maintaining high-integrity data through systematic reviews is vital for organizational success and sound decision-making. Notably, organizations that implement rigorous data validation processes experience a 30% reduction in errors, a tangible benefit of the practice. As data continues to proliferate across sectors, robust methodologies will be essential for transparency and accountability, reinforcing trust and improving outcomes.